GeoLegal Notes - Weekly 3: LegalWeek & Politics of AI Regulation by Sean West / Hence Technologies

LegalWeek and AI politics. Also: EU AI Act leaks, US AI regulation, ChatGPT destruction, Hence asks Rwanda's President about AI, and a judge clones his voice for a podcast.

Last week I puzzled over the lack of GC representation at Davos, and this week brings the Davos of legal tech: LegalWeek. I counted around 90 sessions at LegalWeek, 30 of them with the word AI in the title. The rest have AI references and groan-inducing AI jokes (“I thought I’d use ChatGPT to write my speech” - Too Many People).

What’s interesting is that for all the focus on the use of AI, there’s little focus on the environment in which companies will be deploying AI. That is to say, as AI innovators and cheerleaders wow attendees with their latest wares, potential customers are left unable to fully understand the realm of the possible because they have a blind spot on the broader economic and political context of deployment.

How will fears of mass surveillance and AI powered weapons affect the way AI can be used in the legal sector?

What AI tools will be outlawed for use in legal services?

What obligations will AI producers and users have when deploying said systems?

Do I need to worry about EU obligations if I’m in the US and vice versa?

If you care about those questions, you’ve come to the right place. Starting today, I’ll be interviewing thought leaders in a 3-minute rapid-fire format on the topics of each newsletter. Today, I launch this video series with Kevin Allison of Minerva Technology Policy Advisors.

Chat with President of Minerva Technology Policy Advisors

Kevin is one of the top minds on forecasting AI policy. In our video chat he unpacks the different global approaches to AI as well as the legal and regulatory risk of using AI. Check it out and then read on for how lawyers think AI should be regulated and how the US and EU are actually regulating it.

Government vs the Profession

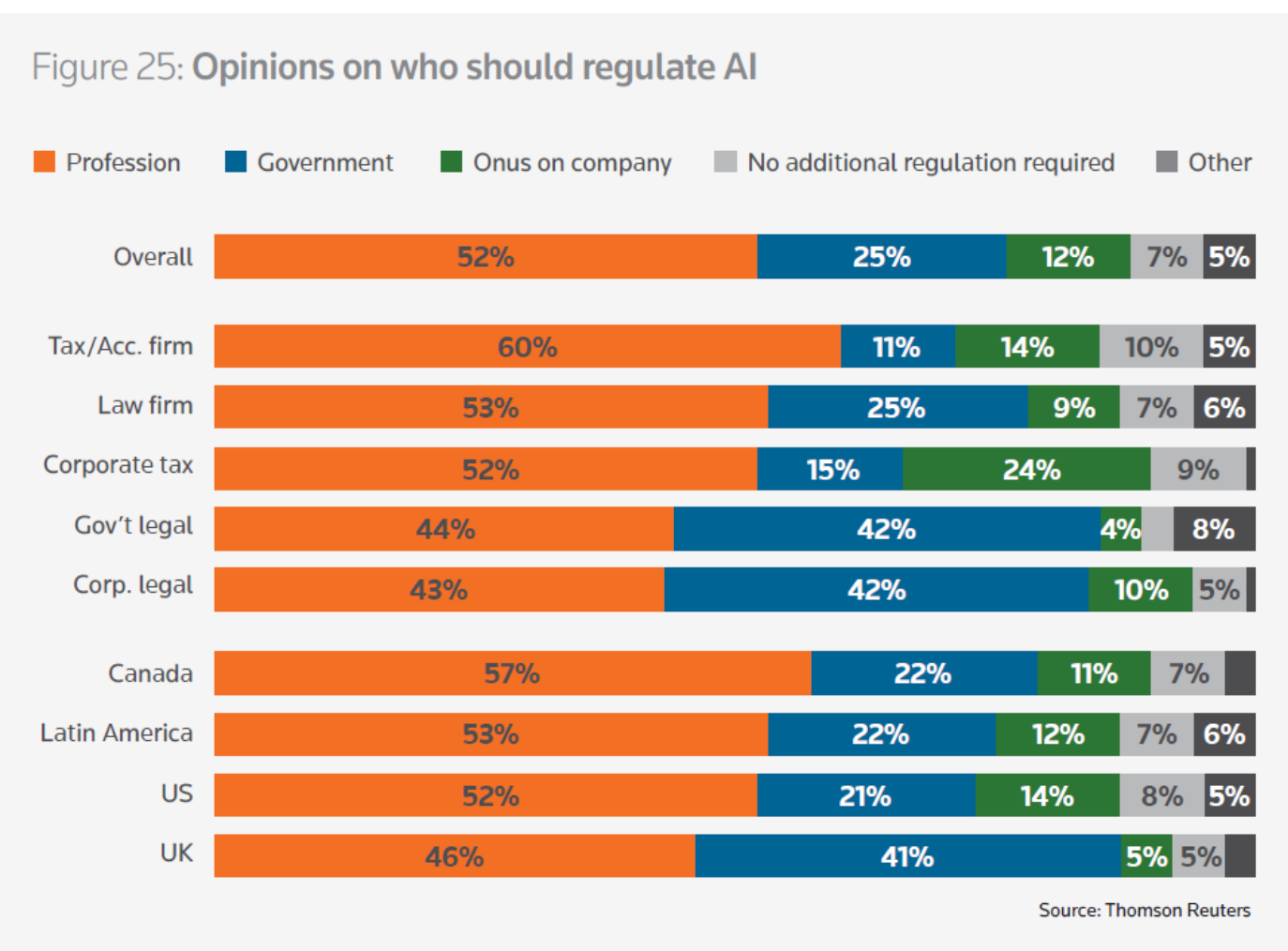

Thomson Reuters recently asked service firm professionals who they think should regulate AI. A startling result emerges: many more law firm lawyers believe the profession, rather than government, should regulate AI. Even government and corporate legal respondents narrowly lean in that direction. I guess it’s not that surprising given the strength of self-regulation in the legal sector writ large. But it’s also not plausible.

AI is literally powering weapons, deepfakes are affecting elections, facial recognition is allowing mass surveillance, and generative AI is threatening to shift norms around copyright protection. Politicians are not going to stand aside and delegate the trajectory of AI in the legal sector to state bar associations. They are going to grapple with policy complexity, regulate with blunt instruments, and grandstand their way to complex and often inconsistent regimes.

And, of course, governments are going to enforce existing rules, as Kevin outlined above. For instance, the US SEC and FTC have both recently warned they will be enforcing against “AI washing,” an uncreative spin on “greenwashing,” where companies inflate their AI capabilities in advertisements or disclosures. That may make legaltech founders think twice about faking it until they make it! But it is also a warning shot for end-users about how to discuss the impacts of AI on their bottom lines.

Leak of the EU AI Act

Europe has been grappling with AI regulation for years and reached a breakthrough set of political bargains in December around the world’s first comprehensive AI act. The act requires additional negotiation and will not come into effect for a couple of years, but a leaked version shows how governments grapple with legislating against present and future AI risks (you can download and skim it below).

The EU is in the lead on regulation because it has the political will to act. The AI act prohibits some AI activities outright: for instance, social scoring systems like those available in China, as well as law enforcement use of real-time remote biometric identification for all but a few specifically outlined purposes. But what about in the legal services and justice space?

The act is specific in drawing a box around how AI can be used in the legal system and in imposing real obligations on technology companies that develop systems for deployment in the EU. Basically, much AI legal software will be classified as high-risk and will have to comply with a raft of complex and onerous obligations or risk a fine of up to 7% of revenue. Allen and Overy has a good breakdown of the act in general, though it’s not specific to legaltech or justicetech.

The AI act specifically highlights how such systems can impact “democracy, rule of law, individual freedoms as well as the right to an effective remedy and to a fair trial”. These include “AI systems intended to be used by a judicial authority or on its behalf to assist judicial authorities in researching and interpreting facts and the law and in applying the law to a concrete set of facts”.

AI for dispute resolution is considered high-risk when there are legal effects for the parties. Judges can use AI to support their decision making but final decisions must be a “human-driven activity and decision.” There is a carve out for AI used for “ancillary administrative activities that do not affect the actual administration of justice in individual cases.”

Moreover, “Natural persons should never be judged on AI-predicted behaviour based solely on their profiling, personality traits or characteristics.”

As I wrote in my 2024 GeoLegal Outlook (below), this poses a real question about how far and how fast tech companies should go in developing such technology if they will have clients in Europe. Enforcement of the Act will not happen for years but other countries will follow suit and mimic the act, as happened after GDPR - and they could enforce sooner.

I generally feel like this shouldn’t send a chill through the justicetech and legaltech industries, but awareness of compliance obligations, and planning for them, is critical for companies to preserve any first-mover advantages they establish.

This raises further questions for the users of said products - those corporations and law firms, large and small, that will be clamoring to learn about all the latest innovations at every legal conference for the rest of the decade.

My general feeling is that unless the business model of the company is exploitative in using the AI for prohibited or high-risk purposes, the regulatory risk is low so long as buyers make sure their vendors are compliant. One benefit of the act that will have global ramifications is that it will increase the documentation requirements for AI producers, particularly producers of foundation models. This will reduce the “black box” issues that are holding back implementation in many contexts, including some law departments.

US Regulation and ChatGPT Destruction?

But what if, instead of regulating AI, a government ordered its destruction? This could happen in the US. OK, stick with me for the boring part to justify the sensational headline.

On the sleepier side, the US is taking the approach of articulating broad principles, with some level of assent from major tech players, and with blanks to be filled in later on a sector-by-sector basis. For instance, on justice issues, President Biden’s executive order said the US will:

Ensure fairness throughout the criminal justice system by developing best practices on the use of AI in sentencing, parole and probation, pretrial release and detention, risk assessments, surveillance, crime forecasting and predictive policing, and forensic analysis. - White House

That’s very different from the EU approach, which is much more sweeping and prescriptive, probably because the US can’t actually get legislation passed in this climate, as Kevin outlines above. However, the US is starting to fill in the blanks, for instance, this week publishing a draft rule to force cloud computing companies to “know their customers” when it comes to foreign companies using their cloud for AI applications. The logic: the US already prohibits the export of chips to countries like China due to fears that US hardware could serve as the basis for AI programs that run counter to US interests. Restricting access to the cloud is one more way to thwart such an outcome.

But while US regulators are moving slowly and in a piecemeal fashion, the courts will soon have an opportunity to shut down ChatGPT in one fell swoop. The New York Times has filed a suit against OpenAI, the maker of ChatGPT, for copyright infringement and has asked for destruction of ChatGPT as a remedy. João Marinotti writes in The Conversation that courts are able to order destruction under copyright law but that this is unlikely. In reality, a company worth $100bn has a lot of space to negotiate a settlement with affected parties rather than face losing the core product responsible for that valuation.

But it’s still an interesting thought experiment: As the rules around AI come into focus, what if particular services are shut down by the courts or politicians? Would you have the option to switch to other services, or would a shutdown present a catastrophic risk if you had implemented the technology?

AI in Rwanda

Those of you who know Hence Technologies know that our core engineering team is based in Kigali, Rwanda. Rwanda is interesting because it has a host of top regional universities like Carnegie Mellon University Africa and African Leadership University, but also because it has been at the forefront of African countries in articulating an AI strategy. Recently, our Rwanda Managing Director Vicky Akaniwabo had the chance to ask Rwanda’s President Paul Kagame about his approach to regulating AI, which you can watch below (she’s at the 16 second mark).

What (Cloned Voices) I’m Listening to

OK, I opened by poking fun at everyone joking that ChatGPT wrote their speech at LegalWeek. But what if a US judge used ChatGPT to produce a podcast featuring his own cloned voice? That would be pretty cool and unexpected.

That’s why I’ve been kind of fascinated with Judge Scott Schlegel’s new podcast, Tech and Gavel - which is basically AI generated and voice cloned. On first pass, it’s a little disconcerting because it sounds so real. Rather than talking about the risk of voice cloning, Judge Schlegel gives you the chance to hear what it sounds like and then highlights how it could wreak havoc in the court system - for instance, in domestic trials that are highly emotional where the fabrication of evidence could really swing an outcome. Listen here.

In the coming days, I’ll be tackling supply chain risk and the evolving ESG landscape. If you’d like to participate in my 3-minute interview series, let me know! Thanks to Mia Tellmann for her assistance with this newsletter. And please tell your friends to subscribe.

—SW