GeoLegal Weekly #17: Toward the Legal Singularity or an AI-driven Sea of Junk?

Huge advances in legal technology give rise to the idea we are headed toward a Legal Singularity. But what if we're just going to be swimming in a sea of bad AI-generated legal advice?

Today, I’ll engage with the idea of an oncoming “Legal Singularity” where the law is perfectly transparent and predictable. There are few better places to contemplate such scenarios than Stanford’s CodeX Future Law 2024 conference, which took place a couple of weeks ago. The emphasis on the intersection of government, law and technology was strong: it’s probably the only place where one can stumble upon a legal tech founder tapped to run for US Vice President!

Picking up on some of the themes from CodeX, I interviewed Stanford Professor David Freeman Engstrom, who gave a fabulous presentation on the future of legal technology. The interview, which underpins much of this week’s analysis, is well worth watching in full.

The Legal Singularity

Technology is driving huge change in the legal system, and the potential for full automation is a really disruptive idea. Abdi Aidid and Benjamin Alarie have an excellent new book, The Legal Singularity: How Artificial Intelligence Can Make Law Radically Better, which makes the case that we are on a pathway to the law becoming fully predictable. That is to say that when everyone has the same legal data and computing power, all legal actors - judges, lawyers, accused, defendants, private adjudicators - will know in advance how the law will be interpreted, making the law effectively automatic and allowing incomparable efficiency and fairness.

To give you a sense of what this world would look like, consider this. What if the world was all red light cameras everywhere you step? That is to say, what if the internet of things and government surveillance systems were designed to report on you every time you broke a rule or simply issue you fines? If you speed in your car, it is connected to highway patrol so a ticket is automatically issued. If you cause a fire by burning your toast, the toaster reports this to the authorities and your insurance company so your liability is clear.

That’s a lot like Big Brother, and Western societies will balance concerns about privacy against movement in that direction. But it is undeniable the technology will exist to do this, if it doesn’t already. And if it were deployed, the need for long, drawn-out court proceedings would be reduced because we would all know the outcome in advance.

Moreover, it could create a fairer court system. For as much concern as there is about biased AI, we need to remember that human judges have their own biases, which AI can potentially mitigate. As one assessment of Estonia’s digital court reforms put it:

judges are black boxes: it is impossible to read the mind of human judges. This highlights the opacity of both human and AI-driven decision-making. Moreover, human judges can make mistakes similarly to machines. For instance, judges can be biased and infuse their inner preferences in their judgments.

Engstrom’s research is more focused on the medium term, but we discussed this concept in the interview above. He referred to it as the rise of “hyperlexis” - a legal system where law is pervasive and enforcement is frictionless and automatic. As he noted, there’s a reason we don’t enforce all rules all the time today: there are equitable values in the law like mercy and extenuation that we lose if we make the law automatic. A lot of that goes away if courts become server farms, an evocative image conjured up in a paper he wrote with Jonah Gelbach looking at the impact of legal tech on civil procedure.

For my part, the more that the law becomes automatically enforced, the more law starts to lose the values it is imbued with and becomes a game ripe for gaming - generating new sources of risk. How do aggressive drivers deal with red light cameras? They speed up to beat the camera, trading a violation they are likely to be caught committing for one they think they can get away with. That type of trade-off probably creates more risk of an accident with another car or a pedestrian at high speed than continuing through the intersection for a split second too long. But the driver accepts that risk to try to beat the system. The more legal enforcement moves in this direction, the more we’ll have to think about where risk is transferred in the system.

To figure out if we’re headed toward the legal singularity, we need to consider a few building blocks. First, will governments and courts liberalize the legal sector? Second, will governments be willing to fully deploy AI? Third, will the way AI is used knock back progress - whether it is weaponized or simply generates garbage legal advice?

Liberalization of the legal sector

For AI to be able to truly shift the legal system, we will need reform of rules on unauthorised practice of law and non-lawyer ownership of law firms. The battle over liberalization has long pitted access to justice advocates on the pro side against plaintiffs’ firms blocking progress. This is changing.

As Engstrom tells me, regulatory reform is creating strange bedfellows. In Texas, on the left, you have traditional access to justice advocates. But on the right you also have conservative Republican forces that simply love deregulating industries. On the latter point, legal is one of the most regulated sectors on the planet, so if you’re looking to shrink government reach you could do worse than “keep your government hands off my legal system” (know your meme). Elsewhere, I argue that private equity, which wants to buy law firms, and big tech companies, which will want their tech to get closer and closer to the practice of law, will increasingly become forces for reform. And, of course, technology companies are well known to view regulation as “break it so they can’t make it” - that is to say, they are willing to break existing rules to prove out tech, and then leverage pressure from users to increase the chances of reform.

You also have the fact that reforms in Utah and Arizona have seen a lot of uptake by lawyers themselves with innovative business models, a point Engstrom, Lucy Ricca, Graham Ambrose and Maddy Walsh raise in their must-read milestone report on the landscape of these states after they reformed their legal systems. This splinters the political wisdom that lawyers are generally against reform - not all will be, and some can become highly motivated advocates.

AI in Government

Engstrom, along with Daniel Ho, Catherine Sharkey and Mariano-Florentino Cuéllar, has done some really interesting research on what he calls “algorithmic governance,” or the use of AI to support government decision-making and implementation. The report surprised me by noting that 45% of the federal agencies it studied have already experimented with AI. I was not surprised that they also noted most of this is pretty low level and unsophisticated. Of course, breadth and sophistication will only increase from here.

The implications of government using AI are worth considering, and I draw here from Engstrom’s report above. First, there is the risk that this widens the technology gap between public and private entities. After all, if the government is going to govern by algorithm, private sector entities will deploy large amounts of capital to stay one step ahead. This raises the second point, which is that not every private sector entity has access to the same amount of capital, creating a distributive concern. That is to say that large companies will be able to employ more technology and bigger legal teams (internal and external) in attempts to comply with or beat the system. That means smaller entities are more likely to bear the brunt of enforcement: “An enforcement agency’s algorithmic predictions, for example, may fall more heavily on smaller businesses that, unlike larger firms, lack a stable of computer scientists who can reverse-engineer the agency’s model and keep out of its cross-hairs,” they write.

The issue here is that this can raise a concern that the system is rigged, with big companies evading consequences - which could undermine support for AI in government from the grassroots level. So, we have to consider that as we roll out more technology, we risk triggering backlash against the tech itself based on distributional impacts.

The Sea of Junk

Both the pressure for liberalization and experimentation within government would indicate that we’re moving further down the path toward the legal singularity. The problem is we are likely to get caught swimming in what Engstrom, citing the National Center for State Courts, refers to as the “Sea of Junk.” That is to say that even though we see some really good products for legal built on top of large language models, we’re also going to see a ton of garbage products and advice. The internet has long been littered with Reddit forums and self-help bulletin boards that include bad or incomplete legal advice and information. You don’t need to be an engineer to understand that training algorithms on bad advice doesn’t increase the quality of the underlying advice. So we’re not just going to see careless lawyers citing hallucinated cases, we’re actually going to see real people try to defend themselves in court with real - but ineffective - legal strategies surfaced by AI legal products. And if they fail in court, that may have lifelong implications. Engstrom notes that if courts banned the use of ChatGPT for specific legal purposes because of quality issues, in one fell swoop many of the products on the market would be knocked out.

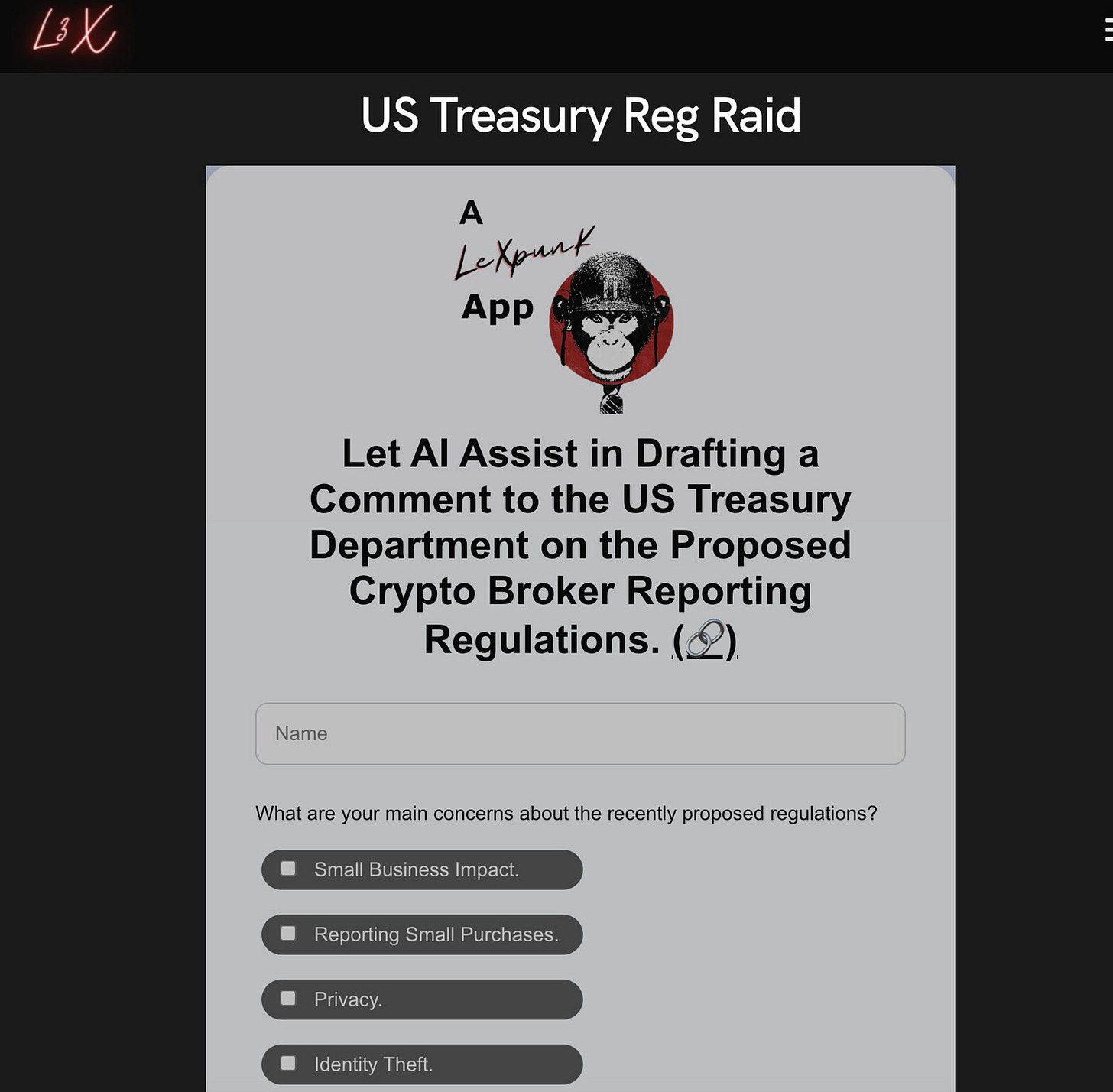

This amorphous risk of junk can also become weaponized into very specific battles. For instance, a crypto lawyer army called Lexpunk Army is “raiding” the IRS with comments on its new proposed crypto broker regime—over 120,000 have been received. Interests that don't like where government is going can now enlist robo-lobbyists to make their point or just cause mayhem.

The process of generating a comment is simple. The tool asks what your main concern is - everything from small business impact to poorly defined terms. It then asks what tone you want (inquisitive or angry, for example) and how much you want it amplified. Following that, it generates a comment you can submit.

The Treasury Department is now literally swimming in the Sea of Junk. To be clear, many of the comments submitted are legitimate concerns by real citizens about crypto law and should be treated as such. But many of the comments are autogenerated repetitions designed to overwhelm the government and make rulemaking more challenging. I wrote about these dynamics in GeoLegal Weekly #12 on robo lawyers so I won’t recap it here except to say that it’s not only happening in a big way, but it will force some pretty serious questions about whether to deprioritize things that give citizens voice in a world of rising and numerous fake voices all around us. This can be a countervailing force against welcoming more AI into the legal system and the policymaking process.

Toward the Singularity or the Sea of Junk?

The upshot of all of this is that we’re in for a pretty disjointed medium-term. And the future will not be evenly distributed.

More and more tech will become available in the US and Europe to assist those who don’t currently have access to lawyers as well as corporations that just want to win more often and more efficiently. And there is lots of money to be made from deploying that technology by investors, legal tech companies and the big tech companies who will benefit when their models are deployed in the legal sector. As a result, I think there are sufficiently concentrated benefits to bet that regulatory reform will go through in big ways that will open up these possibilities.

That will allow us to see what the technology can do - and the technology itself will produce stunning results. After all, in China, AI-assisted judging and case-predicting robots have been around for years (see my piece for Above the Law here), so it’s not hard to imagine that oncoming applications of generative AI will be breathtaking.

But then there’s the Sea of Junk. The big insight in this concept is not garbage in, garbage out. It’s that the reaction function of politicians and regulators will come into play during the initial waves of “garbage out.” One or two examples of ChatGPT hallucinations in court have led to all sorts of disclosure rules. What will the first handful of horror stories of sympathetic people losing in court due to the reliance on automated advice lead to? Or the first examples of AI-powered courts making the wrong decisions due to malfunctions? Or making legally sound decisions without empathy or extenuation for circumstances in cases that garner public support?

And that, of course, rests on cultural norms and citizen voice. In places like China, this is less of a constraining factor and so if we’re taking a global view, I think we’ll be headed toward the legal singularity in less democratic systems much faster. In more democratic systems, we’ll see backlash and pressure against automaticity of the law.

That will lead to a moderation. As with so much innovation, our road to the legal singularity in Western countries will be two steps forward, one step back. The singularity may be near, but “near” may be a moving target.

In Other News

Lunch with former Supreme Court Justice Stephen Breyer: The FT sits down with the former justice over uninspiring cuisine. Breyer talks about how recent Court decisions seem less informed by current public opinion, which risks undermining respect for the law overall - a theme readers of this Weekly will note is a frequent one for me.

McKinsey and the Chinese Government: E. Leigh Dance posted an article on LinkedIn that I had missed, in which McKinsey seems to distance itself from a prior McKinsey China website that discussed work the consulting firm purportedly did with the Chinese government. This risk underscores the fact that client selection is increasingly important in a politicized world - what I called Guilt by Representation in my 2024 Outlook. Working with the Chinese government ten years ago was fair game and a logical function of Chinese companies becoming increasingly extended arms of the state. But now McKinsey could lose out on US government contracting for that past work. For law firms - are clients you are choosing to represent today going to cause you negative consequences in the future?

-SW
